Testing is Not Enough: Transforming Data Quality with Write, Audit, Publish using SDF Build

Before frameworks like SDF and dbt, data professionals relied on ancient relics known as "stored procedures" buried deep in unversioned cloud warehouses. These managed a pipeline's SQL but often lacked business logic enforcement or validations. The rise of data testing within frameworks like dbt brought data quality into the mainstream. However, while dbt made testing more accessible, the way tests are currently executed remains a significant weak spot in many data workflows.

Most testing frameworks today only validate data after it has been written to production. This might sound logical; after all, isn't that when you'd see the results of your transformations? But consider what happens when a test fails. Downstream queries, dashboards, and models start consuming incorrect data almost immediately. In the best-case scenario, you catch the issue before it propagates too far: maybe the next query in the DAG fails or a dashboard goes down. In the worst-case scenario, a critical business decision is made based on faulty data, which is a costly mistake.

Inevitable business logic issues, combined with a reactive approach to testing, erode trust between data teams and the business consumers who rely on accurate insights. Enter the Write, Audit, Publish paradigm: a game-changer in ensuring data quality before data hits production.

What is Write, Audit, Publish (WAP)?

Well, it’s certainly not a reference to a Cardi B song. Inspired by best practices in software engineering, Write, Audit, Publish (WAP) is a "blue-green deployment" for data pipelines. Instead of immediately overwriting production data, a typical WAP paradigm stages updates in a table, runs tests against this staged data, and only promotes it to production if all tests pass. The process ensures that no erroneous data ever reaches production, avoiding unnecessary downtime or downstream impact.

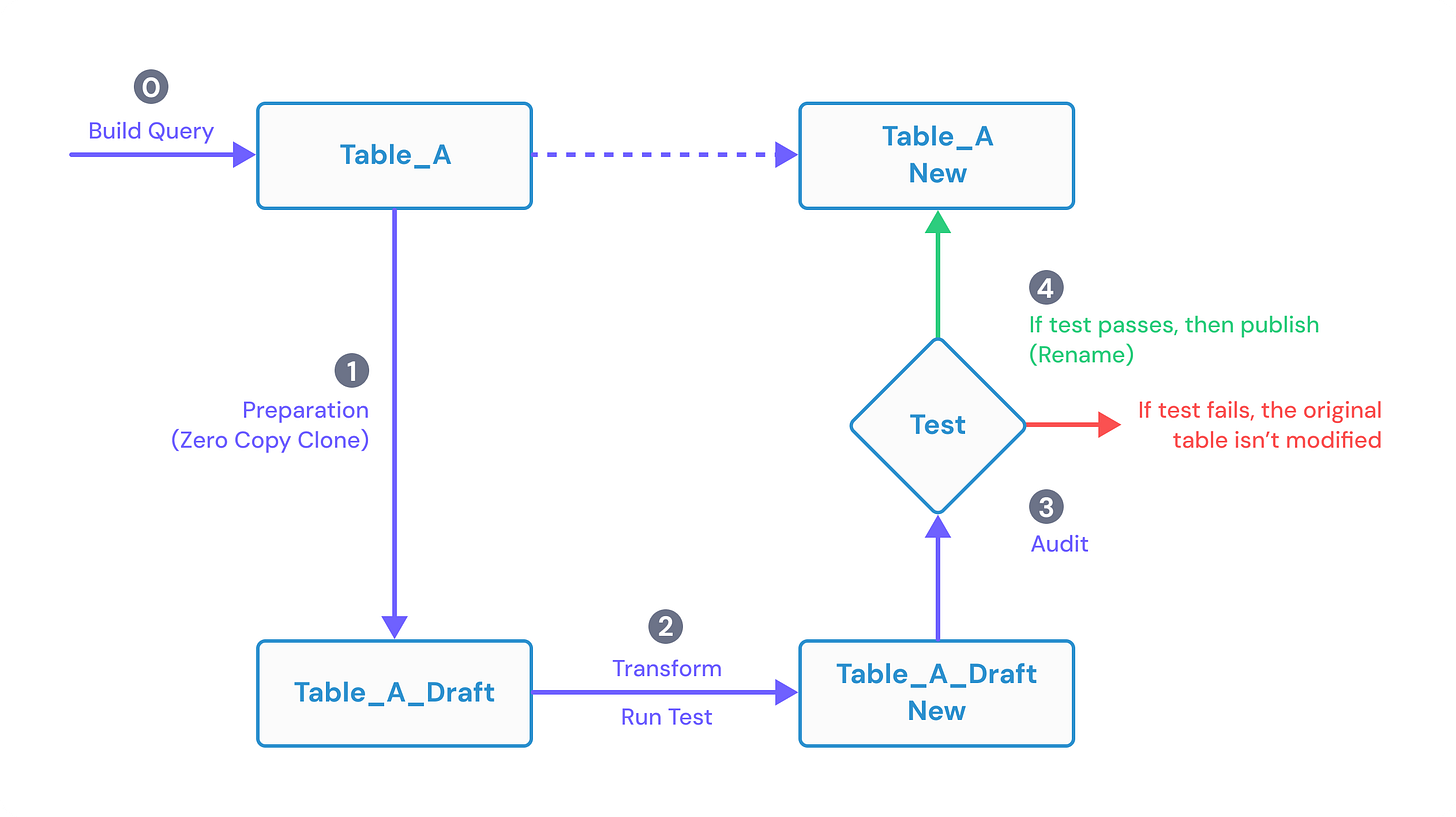

Here’s a breakdown of how it works:

Write: The transformation runs and stages its results into a temporary non-production table.

Audit: Data quality tests are executed and the results are audited in order to identify and rectify any issues.

Publish: If tests pass and issues are resolved, the non-production table is promoted to production (ideally in a way that doesn't recompute the results). This ensures atomicity and avoids rerunning computations.
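The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not how SDF implements the pattern: it uses an in-memory SQLite database as a stand-in for a cloud warehouse, and the function name `write_audit_publish`, the `_draft` naming, and the audit format (a query that counts offending rows) are all assumptions made for the example.

```python
import sqlite3

def write_audit_publish(conn, target, select_sql, audits):
    """Stage `select_sql` into `<target>_draft`, audit it, publish only on success.

    `audits` maps a test name to a SQL query with a `{table}` placeholder that
    returns the number of offending rows; zero means the test passed.
    Expects a connection opened with isolation_level=None (autocommit).
    """
    draft = f"{target}_draft"
    cur = conn.cursor()

    # Write: materialize the transformation into a non-production table.
    cur.execute(f"DROP TABLE IF EXISTS {draft}")
    cur.execute(f"CREATE TABLE {draft} AS {select_sql}")

    # Audit: run every test against the staged table, never against production.
    failures = [
        name for name, sql in audits.items()
        if cur.execute(sql.format(table=draft)).fetchone()[0] > 0
    ]
    if failures:
        return failures  # production is untouched; the draft stays for inspection

    # Publish: swap the draft in by renaming, so nothing is recomputed.
    cur.execute("BEGIN")
    cur.execute(f"DROP TABLE IF EXISTS {target}")
    cur.execute(f"ALTER TABLE {draft} RENAME TO {target}")
    cur.execute("COMMIT")
    return []

conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE raw_orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", [(1, 10.0), (2, 25.5)])
conn.execute("CREATE TABLE orders AS SELECT * FROM raw_orders")  # current production

audits = {"amount_not_null": "SELECT COUNT(*) FROM {table} WHERE amount IS NULL"}

# A bad row arrives upstream: the audit fails and production keeps its old rows.
conn.execute("INSERT INTO raw_orders VALUES (3, NULL)")
bad = write_audit_publish(conn, "orders", "SELECT * FROM raw_orders", audits)
rows_after_bad = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

# Once the issue is fixed, the same call publishes the new data.
conn.execute("UPDATE raw_orders SET amount = 5.0 WHERE id = 3")
good = write_audit_publish(conn, "orders", "SELECT * FROM raw_orders", audits)
rows_after_good = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The point to notice is that the failing run never touches `orders`: consumers keep reading the last good version until a draft passes every audit.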

By decoupling testing from production updates, WAP empowers teams to build trust in their data pipelines without the constant fear of breaking downstream systems.

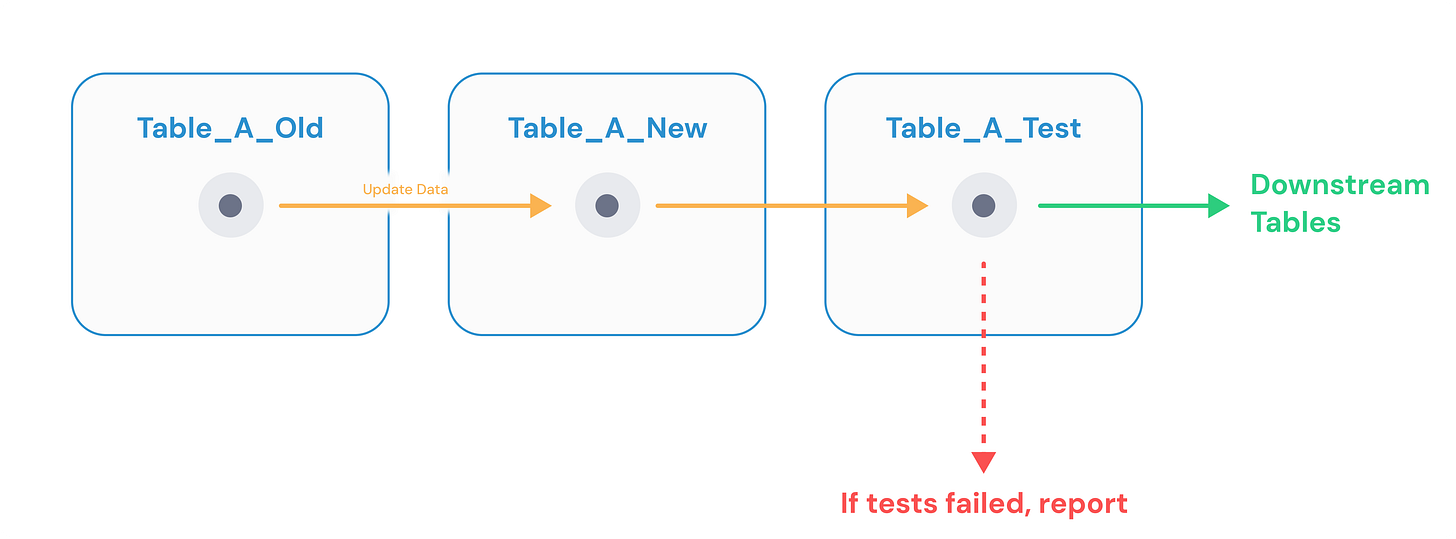

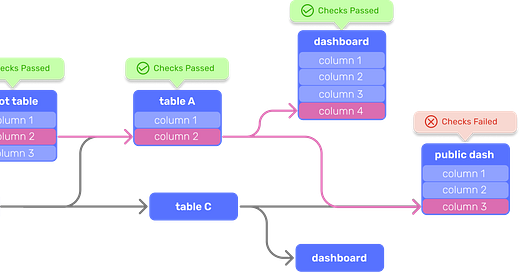

A traditional pipeline execution with data quality tests might look something like this, where tests are executed after the model’s data has already been updated.

Critically, this means downstream tables would still run even if the tests failed, resulting in faulty data all the way down to the data consumer, be it a dashboard or an embedded application.

With WAP, updating Table_A would look more like this, where Table_A is first staged temporarily, then updated in production only if all tests pass.
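The difference between the two execution styles can be made concrete with a toy DAG runner. The sketch below is illustrative only: `run_dag`, the model names, and the `gate_on_audit` flag are hypothetical, and no real transformations are executed. It simply records which models would land in production when Table_A's tests fail.

```python
from graphlib import TopologicalSorter

def run_dag(deps, audit_passes, gate_on_audit):
    """Return the models that publish to production, in execution order.

    deps: model -> set of upstream models.
    audit_passes: model -> bool, the outcome of that model's tests.
    gate_on_audit: False mimics test-after-write; True mimics WAP.
    """
    published, blocked = [], set()
    for model in TopologicalSorter(deps).static_order():
        if any(up in blocked for up in deps.get(model, ())):
            blocked.add(model)  # an upstream model never published, so skip
            continue
        if gate_on_audit and not audit_passes.get(model, True):
            blocked.add(model)  # draft failed its audit; production is left as-is
            continue
        published.append(model)  # without gating, data lands even if tests fail
    return published

deps = {"table_a": set(), "table_b": {"table_a"}, "dashboard": {"table_b"}}
audit = {"table_a": False}  # Table_A's tests fail in this run

traditional = run_dag(deps, audit, gate_on_audit=False)
wap = run_dag(deps, audit, gate_on_audit=True)
```

In the traditional run all three models are refreshed on top of faulty data. In the gated run nothing publishes, so downstream consumers keep seeing the previous, validated state.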

From Paradigm to Reality: Meet sdf build

As of version 0.10.7-p, SDF introduces a new command that abstracts the Write, Audit, Publish workflow: sdf build. With this feature, teams can seamlessly adopt WAP without worrying about the underlying mechanics.

The simplicity of sdf build lies in its ability to:

Automatically stage data transformations with a _draft suffix.

Run all configured data tests on the staged data.

Publish validated data to production without rerunning it. This is done through ALTER statements that rename the draft table and drop it in a transaction.
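To give a feel for a rename-based publish step, here is a rough sketch using SQLite, where DDL can be rolled back. It is not the SQL that sdf build emits, and Snowflake and BigQuery have their own syntax and transaction semantics. The helper `publish_draft` and the `_retired` table name are hypothetical, and the sketch assumes both the production table and its draft already exist.

```python
import sqlite3

def publish_draft(conn, target, draft_suffix="_draft"):
    """Promote `<target>_draft` to `<target>` using renames inside one transaction."""
    draft = f"{target}{draft_suffix}"
    retired = f"{target}_retired"  # hypothetical name for the outgoing table
    conn.execute("BEGIN")
    try:
        conn.execute(f"ALTER TABLE {target} RENAME TO {retired}")
        conn.execute(f"ALTER TABLE {draft} RENAME TO {target}")
        conn.execute(f"DROP TABLE {retired}")
        conn.execute("COMMIT")
    except sqlite3.Error:
        conn.execute("ROLLBACK")  # production keeps its previous contents
        raise

conn = sqlite3.connect(":memory:", isolation_level=None)  # we manage transactions
conn.execute("CREATE TABLE metrics (value INTEGER)")
conn.execute("INSERT INTO metrics VALUES (1)")
conn.execute("CREATE TABLE metrics_draft (value INTEGER)")
conn.executemany("INSERT INTO metrics_draft VALUES (?)", [(10,), (20,)])

publish_draft(conn, "metrics")
published = [r[0] for r in conn.execute("SELECT value FROM metrics ORDER BY value")]
tables = sorted(
    r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
)
```

Because only metadata changes, the staged rows are never recomputed. If any statement in the swap fails, the rollback leaves the production table exactly as it was.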

It also works out-of-the-box with complex materialization strategies like incremental models and snapshots, so the same semantics and optimized computation are preserved between sdf run and sdf build.

Think of sdf build as a bridge between the testing revolution dbt started and the operational excellence software engineering has perfected. It brings predictability, reliability, and trust to your data workflows—all in one intuitive command.

sdf build is available as of preview release 0.10.7-p and works on native SDF workspaces in Snowflake and BigQuery. Support for running sdf build on dbt projects and models is coming soon.

The Business Value of SDF Build

Implementing WAP with sdf build doesn’t just improve data quality—it drives measurable business outcomes:

Reduced Dashboard Downtime: Dashboards reflect only accurate data, minimizing disruption to decision-making processes.

Lower Maintenance Costs: By catching issues before production, the need for backfilling data or debugging pipeline failures is significantly reduced.

Increased ROI on Developer Spend: With less time spent fixing broken pipelines and more time focused on value-add projects, developer productivity soars.

Save Compute on Bad Data: By short-circuiting pipelines when data quality is poor, sdf build skips the compute that would otherwise have produced unusable data.

Consider the impact on a team’s overall efficiency. With fewer firefights and improved reliability, data engineers can redirect their focus to innovation and data consumers can trust the data they’re seeing.

Case Study: Accelerating Data Pipelines in Health Insurance

A large health insurance company had previously implemented its own version of Write, Audit, Publish using dbt. While effective, their custom solution required constant maintenance due to extra configuration in their orchestrator and came with a significant performance overhead.

After migrating to sdf build, they cut the execution time of one of their critical DAGs by roughly 80%, from more than 22 minutes to just 4 minutes and 30 seconds. This dramatic improvement was enabled by SDF’s runtime parallelism and low overhead, thanks to its Rust-based architecture.

Moreover, all of this was accomplished without additional engineering effort. The clean abstraction of sdf build eliminated the need to maintain their homegrown WAP solution (thousands of error-prone lines of Python), freeing up their team to focus on higher-value initiatives.

The End of Fragile Pipelines

Testing alone was a great first step, but testing in isolation isn’t enough to ensure trust in your data. Write, Audit, Publish offers a proactive, resilient approach to data quality, and with sdf build, it’s never been easier to integrate this paradigm into your workflows.