Overview

This is the second of a series of posts detailing our experience building a fully Rust based SQL compiler, type system, and data build system called SDF. While Part 1 was a breakdown of the higher level philosophy we’ve taken while building, we’ll now jump into the details and talk through some of our more specific architectural and developer tooling choices.

So without further ado welcome to part two, where we cover:

Developer Productivity and Hardware Considerations

Lock-Free Parallelism

Allocator Benchmarks

Improve the Inner Loop of Rust Development

Software development productivity is most often about iteration speed. Common practice is to focus on ‘agile software development’, ‘cloud based solutions’, and ‘finding 10x developers’ so that every engineer gets more done, faster. We believe productivity is compounding; it is with a good foundation and small but consistent improvements that we unlock long-term benefits. This is especially so with Rust.

Don’t Skimp on Developer Hardware

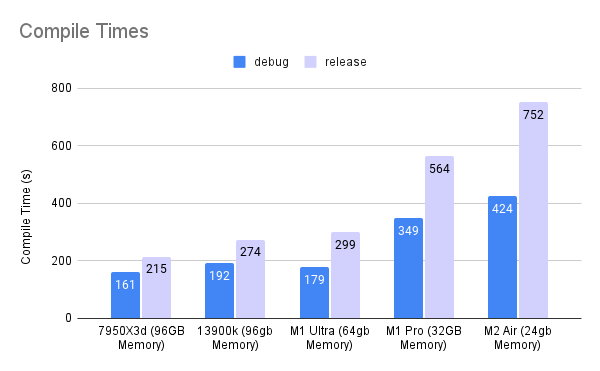

It’s no secret that Rust is resource intensive. It generates vast amounts of intermediates during compilation to hydrate the build-cache. The build cache consumes disk space to power faster incremental builds. But this combination of resource intensive compilation and heavy cache usage means that it’s easily worth it to invest in powerful workstations with fast IO for all engineers.

For a startup, time is the most valuable resource. Hardware is cheap in comparison. Therefore, we radically focus on minimizing edit, compile, test cycles. The below shows the massive difference of compile times on various hardware (note SDF is currently ~1000 crates). SDF’s internal parallelized testing infrastructure consumes 60+GB of memory and fully utilizes all cores.

Invest in CI Infrastructure that Mimics Customer Hardware

Initially, all engineers worked on M based Macbook Pros with a single CI runner for builds. But production customer deployments were on Linux distributions with 64 core X86 machines. For a long time we were flying blind not recognizing how various memory allocators perform under different workloads and different hardware environments (see below). We left performance on the table for months.

So, if you want to have full visibility into how your Rust product behaves on diverse hardware, you have to have a diverse CI infrastructure.

SDF’s native targets are:

Mac:

x86_64-apple-darwin&aarch64-apple-darwinLinux:

aarch64-unknown-linux-musl&x86_64-unknown-linux-muslWindows:

x86_64-pc-windows-gnu

Today, our CI/CD tests on all supported targets on every PR, uses a build-cache to avoid rebuilding imported crates, and has runners self-hosted with GitHub Actions. A build & test run in CI/CD takes <15 minutes and provides safety at scale and across binaries.

Parallelism & Threading Models

Although there’s a lot of discussion about thread-safety in Rust, strategies for efficient parallelism are not frequently given much thought. It makes sense. In modern software development, it is frequently more time effective to just use any one of a number of higher level libraries that abstracts away complexities (in Rust, Rayon is one of our favorites, due to its simple and intuitive API. Unfortunately for async code Tokio is still required, which is a lot more complicated to use). While we do utilize libraries, we’ve found that Rust’s vaunted thread-safety lends itself incredibly well to highly optimized, lock-free parallel software architecture.

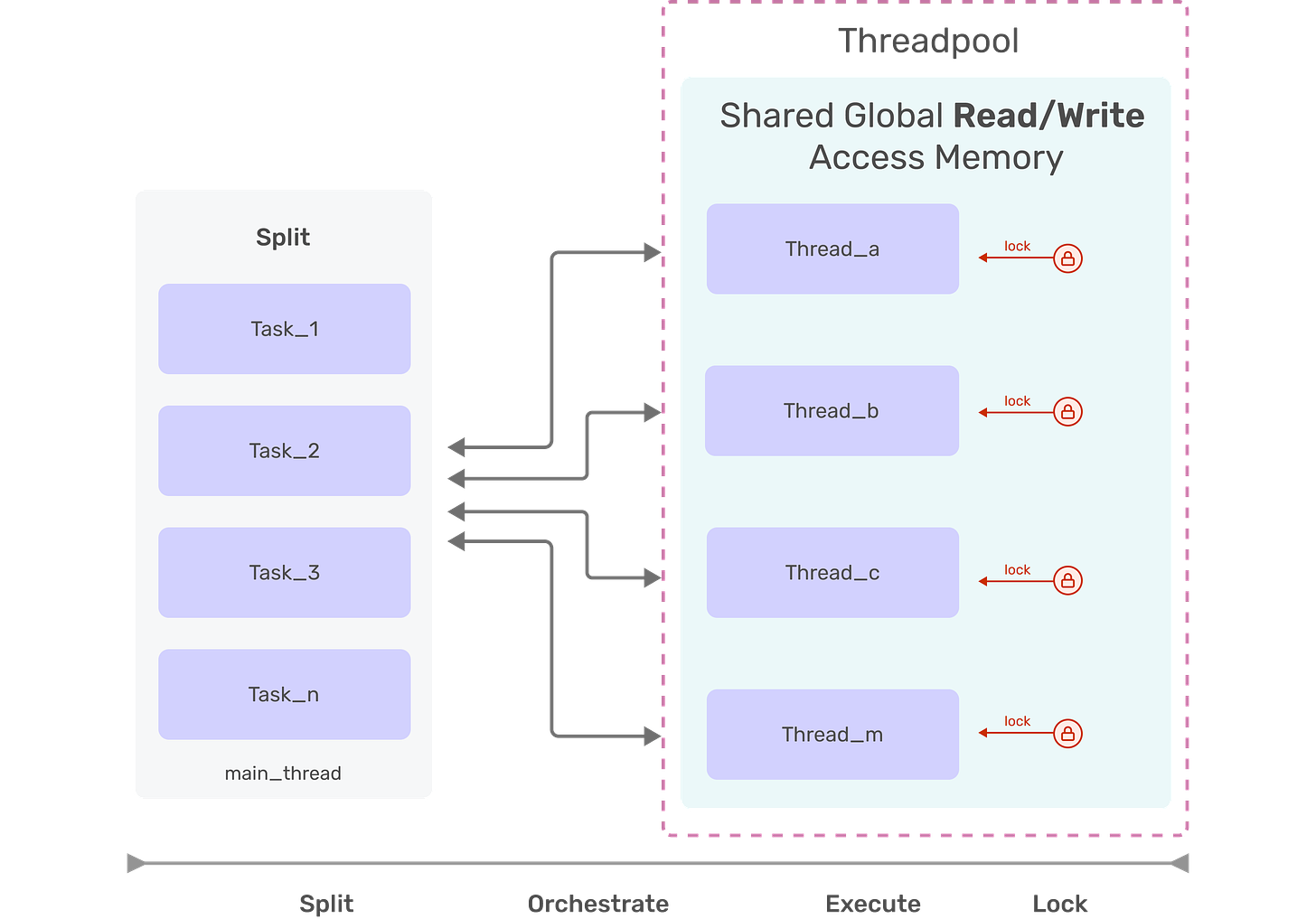

Traditional Threading Models

Generally, it’s understood that copying memory from thread to thread is most expensive, and it’s better to opt for a ‘message passing’ strategy. When one thread is done with a chunk of work, and that work is written into memory, this result is passed to the next thread, or returned to the ‘factory’. Using mutexes we guarantee that only a single thread has access to a single chunk of memory at any given time.

However, this lock/free strategy has some problems. Any thread waiting on a locked chunk of memory can not do work.1 And, the more threads you have, the more coordinating of locks and frees you have to do. As a result, performance with lock/free architectures often does not scale linearly with core-count.

Lock Free Parallelism in SDF

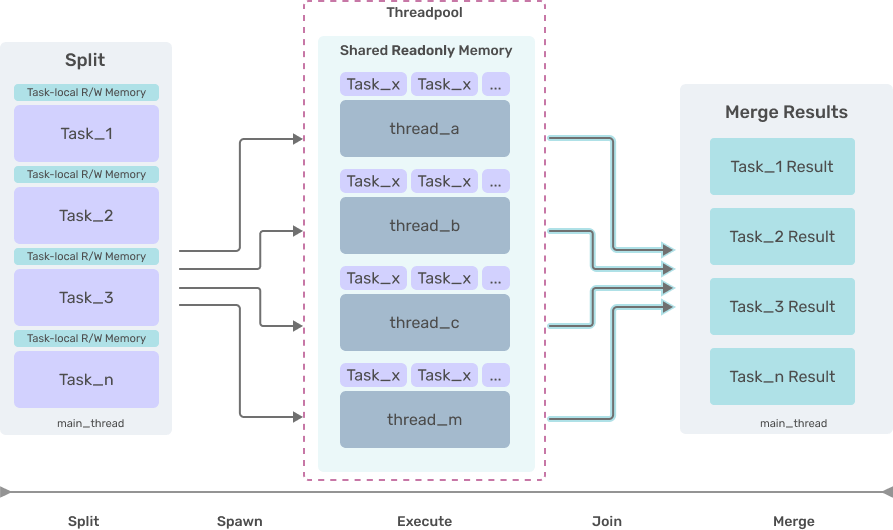

As a compiler and database engine, our goal was to scale to high core-count architectures. To maximize performance we designed completely lock free implementations of various algorithms, including topological sort. When we have some work to be executed in parallel we set up an environment of shared read-only memory, and provide each thread with a small store of writeable memory that is used to contain the result.

The steps:

Split - Let’s start with a map of jobs we need to execute. We provision the global shared memory that all jobs can read from. We also provision per-job writeable memory (including an IO buffer for any potential output). This is where our work will happen and solutions will be placed.

Spawn - Using Tokio as our runtime, we spawn an async task for each job, which has access to both the shared readonly memory, and the per-job writeable memory.

Execute - Concurrently execute all tasks in the threadpool. In the case of DAGs and reading files in directory structures we can execute tasks in waves.

Join & Merge - We aggregate the per-job memory (now filled with our solutions) in our main thread to produce our final result. Console UI output is collected per thread and displayed in standard IO.

With this architecture, there is a tiny penalty in the split phase to provision all necessary elements and allocate new memory for every thread/task. But, we reap rewards in the execute phase since no thread is ever prevented from doing work because of a lock held by another thread.

As a result of this design, SDF’s code compilation scales almost linearly with core count.

Allocator Benchmarking

After releasing Part 1, one of the larger points of discussion was around Jemalloc, and allocator performance in general. One comment from Reddit user u/ssokolow:

Don't stop at Jemalloc. Experiment.

So of course we had to. Results from popular and default allocators below, all normalized to the same machine (16 cores, 96GB ram). Notice that sdf compile (code compilation & static analysis) has much more variance than sdf run (SQL statement execution). Why?

Looking at the object tree, compiling code creates many more small objects. Code compile starts with 16,000+ SQL file objects whereas run deals with only ~10 data files and the 22 SQL statements to execute. Said differently - compile is IO heavy while run is compute heavy. As such, the allocator has to do much more work during compile.

We have not yet looked into how the alloc/free strategies differ between allocators. That will have to wait for another post.

Closing Thoughts

Building performant software products requires performant engineering infrastructure that minimizes the time developers spend waiting on automated processes like compilation and testing.

Some points in this post might seem obvious (faster machines = better) but until working with Rust, we hadn’t experienced an ecosystem that was so dependent on great hardware for a great development experience.

Similarly, while we’ve been designing parallel software for decades (SDF’s CTO was the architect of Microsoft’s Task Parallel Library for .NET), seeing Rust’s built-in guarantees lend themselves to highly scalable threading models has been inspiring.

Tl;dr: Maybe Ballmer had it right all along, it’s all about - “developers Developers DeVeLoPeRs DEVELOPERS.”

Interested in contributing to this series? If you have any other tips, tricks, or resources to share, please do so in the comments below. Or, if you’re thinking of adopting Rust for your project, we’d be happy to talk, share our experiences, and grow the ecosystem.

Tokio does provide an async version of Mutex, which does not block the calling thread. However, as explained in the doc it has higher overhead compared to the ordinary blocking Mutex, and as such is probably ill-suited for tight CPU-bound critical sections.