How we got here

SQL is the de facto language of data, ranked the third most popular development language (in front of Python) in Stackoverflow’s most recent 2023 survey.1 SQL is the industry standard in data transformation, but the language’s age, stratification, and decades of imperfect decisions leave it insufficient to meet the needs of the Modern Data Stack. Ultimately, all of us are relying on a language that lacks flexibility, testability, and composability.

So - what improvements can be made to the SQL language in the near future?

After 50+ years of stratification, SQL is also many things to many people. Different flavors of SQL have been developed to address different data use cases. What started as a language purely for transactional databases has evolved to support streaming data and batched data, structured and unstructured data, reading and writing dozens of different data formats. SQL engines run on your phone and are distributed over 1000s of nodes in the cloud.

But despite its success, SQL is missing many of the tools and features that greatly benefit software development. The growing interest in ‘shift left’ data development is evidence that people are waking up to the fact that data development has to get better. The world of data needs a true type system, more powerful compilers, and reusability.

To understand how we got here and why SQL is so fragmented, let’s take a tour of SQL’s history. There are several epochs in SQL’s evolution.2

Phase 0 | Defining SQL

1970 - 1980

In 1970, Edgar Codd published a now famous paper “A Relational Model of Data for Large Shared Data Banks” (still a great read) describing relational algebra for database systems.3

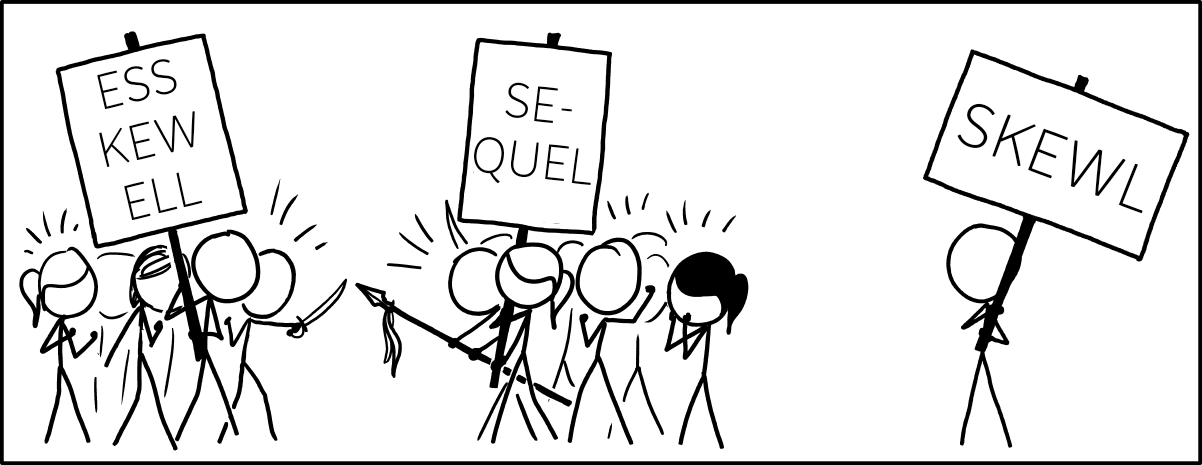

Two IBM engineers then created the first language implementation, initially called SEQUEL (Structured English QUEry Language). SEQUEL aimed to provide a high-level, English-like language for querying and manipulating data stored in the relational model, although even that didn’t adhere strictly to Codd’s relational model.4

SQL has a few interesting characteristics. First, SQL is declarative rather than imperative (like C, Python, or Rust). When you write a SQL query to retrieve data from a database, you describe the data you want to retrieve rather than detailing how the database engine should perform the retrieval.

SQL is composed of four language subsets, some of which are now more widely used than others

Data Definition Language (DDL) - Defines tables (e.g

CREATE TABLEstatements)Data Manipulation Language (DML) - Alters tables (e.g

INSERTstatements)Data Query Language (DQL) - Defines queries (e.g

SELECTstatements)Data Control Language (DCL) - Defines access policies (e.g

GRANTstatements)

IBM soon found competition from other vendors with their own dialects and features. Oracle joined the fray, along with Ingres, and Teradata (yes, that Teradata!).

Phase 1 | Online Transaction Processing (OLTP)

1980 - 2000

Recognizing the need for standardization, ANSI (American National Standards Institute) formed a committee in 1981 to create a standard SQL specification. This effort resulted in the SQL-86 standard, the first formal definition of the SQL language in 19865.

Despite the valiant push for standardization, IBM, Oracle, MSFT, and other vendors kept competing to add unique features and capabilities. Their goal was to lock in their customers and oftentimes aimed for a lack of portability between systems.

Standardization fights stratification.

SQL databases grow larger. Structured cabling and cheaper networking infrastructure result in companies co-locating many machines together.

The term Data Center is adopted sometime in the late 1990’s.

Phase 2 | Online Analytical Processing (OLAP)

2000 - 2010

At some point in the mid-2000s, the cost of storing historical information dropped lower than the value (or at least perceived value) that could be created from that information.

In 2006, the mathematician Clive Humbly proclaimed that “Data is the New Oil” 6 asserting astutely that data would quickly become the most valuable commodity to many modern businesses. To this day, businesses need scalable solutions to remain in control of their data.

Unstructured log data is collected in huge volumes, but by definition, it is incompatible with StructuredQL we know to date. Companies take more and more frequent snapshots of consistent, structured databases. But, with schema changes over time, these snapshots are not consistent with each other. We need a way to do SQL-like analytical processing across this more loosely defined corpus of data which is often too large for any single computer to handle.

Analytical processes include new concepts and new challenges. While transactional databases are internally consistent, analytical processing by definition requires functionality across schema changes and time.

SQL was popular and easy to understand but did not scale well. So, Google released Hadoop in 2006 with an innovative map-reduce model and distributed file system known as HDFS. But, even though Hadoop can handle scale, it is unintuitive to learn.

To address the shortcomings of Hadoop, Facebook designed and released HIVE in 2010 to provide a SQL-like interface on top of Hadoop and HDFS.

In this decade, SQL engines became much more complex and specialized. In 2002, the first mainstream multi-core processor was launched with Intel’s Pentium 47. For the first time, SQL engines could optimize multiple dimensions, between IO, query execution, logical plan, and physical plan for execution. Known as multi-core execution, could scale vertically much more efficiently. Over Phase 3, this specialization would continue with boutique engines optimized for in-memory processing, time-series data, or redundancy.

Phase 3 | Big Data

2010 - 2019

As data centers grew, multi-core architectures evolved into many-machine architectures. In a 2012 Harvard Business Review article, two technologists proudly declared "Data Scientist: The Sexiest Job of the 21st Century".8

Data science, data analytics, and data engineering began to permeate every aspect of software development. We enter the age where humans generate data, sensors generate data, communications generate data, machines generate data, and even data generates more data.

Different data types have led to purpose built SQL engines that optimize for time series data or memory efficiency or redundancy, or local processing vs cloud processing. Columnar file formats like Orc and Parquet showcase how storage layer optimizations can dramatically improve query times. Parquet became a top level Apache project in 2015.

Around this time, AI began to harness exponential growth in data. 2011 is the year that a Convolutional Neural Network (CNN) reaches superhuman levels of handwriting recognition on the MNIST dataset.9

Phase 4 | Intelligent Data

2019 - Now

We are entering the 4th age of data.

Data is the fuel for AI. AI is increasingly the engine of digital transformation which generates yet more data. By every metric, data, and artificial intelligence are inextricably linked in a synergistic relationship.

But, SQL is showing its age. The ubiquity of data-enabled products has led to pressure from all angles: privacy, governance, scalability, access controls, discoverability, and developer productivity.

Even small companies have thousands of tables for analysis. Large companies have millions. An increasingly complex “Modern Data Stack” tries to make up for shortcomings. Crucially, data tools typically come from platform vendors, each competing to fill a niche need.

Software development has over the last 40 years developed tools and techniques to speed up the inner loop of development. Despite what the bulging modern data stack would have you believe, data development tools have not kept up.

Here’s a list of functionalities software developers expect (or take for granted) and SQL simply does not offer.

SQL Lacks a Type System: Simple logic errors like using the wrong metric or aggregating different currencies can not be caught during development time

SQL Lacks a Build System: In large part, SQL dependencies are managed manually via airflow, or with vendor specific syntax. Compare that to software build systems like cargo which automatically generate dependencies graphs and compilation order.

SQL Lacks Integration Tests: Tools for data tests are commonplace. Data tests are like testing in production. They can only catch bugs after they have shipped. Helpful when you have nothing, but proactive capabilities would be so much more powerful.

SQL Engines Lack Global Optimization: For the last 50 years, SQL engines have been designed to execute only a single statement at a time. Meanwhile, software build systems support global optimizations. With exponentially increasing data volumes and compute costs it is crucial that SQL engines catch up.

SQL Lacks Contextual Language Servers: The prevalence of SQL Dialects, and popular code-generating tools for Jinja and Python, makes it incredibly difficult to create the highly contextual language servers that software engineers typically depend on.

SQL Lacks a Package/Module System: Partially due to the declarative nature of the language, SQL has no package system. There is not even a sharing of UDFs let alone metrics or higher order logic. As a result, every company has to re-invent every metric, every UDF, and every dashboard.

As a result, data developers have of course begun looking into different data manipulation technologies (like dataframe APIs) in other languages.

What Now?

We believe SQL can, and will evolve to power the Intelligent Data age. But, it’s not there yet. There are many tools left to build. Tools with more context, less vendor lock-in, and more flexibility. We predict that commonplace concepts in software like ‘build systems’ and ‘language servers’ and ‘packages’ will find their way into data development.

We’re excited to be a part of that journey and look forward to releasing SDF publicly soon.

For a more detailed history of SQL’s evolution until 2004 read Stonebraker and Hellerstein’s: What goes around comes around.