Understanding the Problem

SQL Runs the World! But.. Which SQL?

Developers look at file extensions to know what they are dealing with: typescript is denoted by .ts, rust by .rs, python by .py, etc.

Unfortunately for SQL writers everywhere, .sql files are not all the same! For historic (sometimes selfish vendor lock-in) reasons SQL has evolved into dialects. SQL dialects vary in all sorts of things: syntax, name resolution, function behavior, nullability, structured types, and many more.

If you only ever use one engine or one dialect, you likely don’t care about these differences. However, most enterprise data developers deal with different engines and their various dialects every day.

For developers this is infuriating. For companies it’s expensive. For engine providers it’s a powerful retention tool 😈.

SQL’s Tower of Babel exists. We can’t ignore it. We can’t hide from it. But we can build a Babel Fish. That’s why we built SDF.

Just How Tall is the Tower?

Syntax

Let’s start with a one liner. Suppose you are given this query q1:

-- query q1

select “Abc” from some_schema.some_table;This query is valid SQL in any dialect, but has a different meaning in BigQuery vs ANSI standard languages like Trino, Spark, and Redshift.

BigQuery uses double quotes to delineate string literals, so executing q1 on BigQuery returns a single row with the string (“Abc”). However according to ANSI SQL, double quotes are for quoting identifiers, so in Trino, “Abc” actually denotes a column in some_table.

You might say this difference is shallow. All you have to do is understand the lexical level and know which SQL dialect you are working with, simple right? But it gets worse.

What’s in a Name?

Let’s look at query q2, which reads from a table called x, which we assume has a single column x of type integer. What does q2 mean?

-- query q2

SELECT x FROM some_schema.some_table as x;The semantics depend on how the engines interpret the three x identifiers that are in scope: Let’s label our three versions of x:

x → the x in the SELECTx’ → the table alias x referenced in the fromx” → the column named x in table some_table

Let’s assume some_table has a column with a single row containing the value 1.

In Trino, as in any ANSI dialect, the

xin theSELECTis a column reference. So Trino will project column x’’ from tablesome_tableand return a columnxof typeintegerwith a single row of value1.In BigQuery the x in the

SELECTis a table reference. So BigQuery returns a column called x with typestruct<x: int>and a single row of value{x:1}.

As we can see, fundamentally different binding rules across dialects mean the same query can return different types and different results!

Not Even Math is Safe

Can’t we at least agree on math? Unfortunately, the Tower of Babel never stops growing. For instance what does q3 mean?

-- query q3

SELECT sum(t.x) FROM some_schema.table t;Let’s execute q3 on Trino and assume that t.x has type decimal(p,s). In Trino the resulting type will be decimal(p,s). This means if two values are summed and the result exceeds scale and precision, an overflow will occur. In Spark the resulting type will be decimal(p + min(10, 31-p), s). The Spark engine automatically increases the precision to avoid overflows. This is great… until you start migrating queries from one engine to another and run into sporadic overflows in Trino.

Structured Types

So far we have focused on subtle but important differences of SQL dialects. It’s worth mentioning the SQL core is largely well behaving, i.e. most queries over simple basic data types using relational operators and fully qualified names behave the same. For instance q2 could have been written as SELECT x.x FROM x; and now BigQuery and Trino agree on the resulting type and value.

But as soon as you step out of this subset and use structured types like arrays or structs and custom types like json or geography, SQL engines and their dialects diverge even more dramatically. Presto and Spark have higher order functions over structured types — most other popular dialects do not support them. Meanwhile BigQuery boasts flattening features for structured types that are unmatched by other SQL engines.

SQL engine optimizers often exploit deep knowledge of the supported types to improve performance. Therefore, once you use non-standard types in your query, you are likely locked in to that engine for good (or bad).

SDF, the Babel Fish

The SQL development experience can be improved with more standardization or better tooling. We are not in the position to force standardization, but we are here to help build data tools that are on par with modern software engineering tools and programming languages like Typescript or Rust.

So what is needed to make SQL a better programming environment?

First, a deep understanding of the existing SQL dialects.

Second, an expressive type system that understands precisely how data flows and how it is being transformed.

Third, modern abstractions and a package system so that we can finally build a robust ecosystem of SQL modules.

Lastly, a faster development loop which exposes logical & syntactic errors during authoring, not execution.

The foundation of the bullets above? A true multi-dialect SQL compiler. This is why we built SDF.

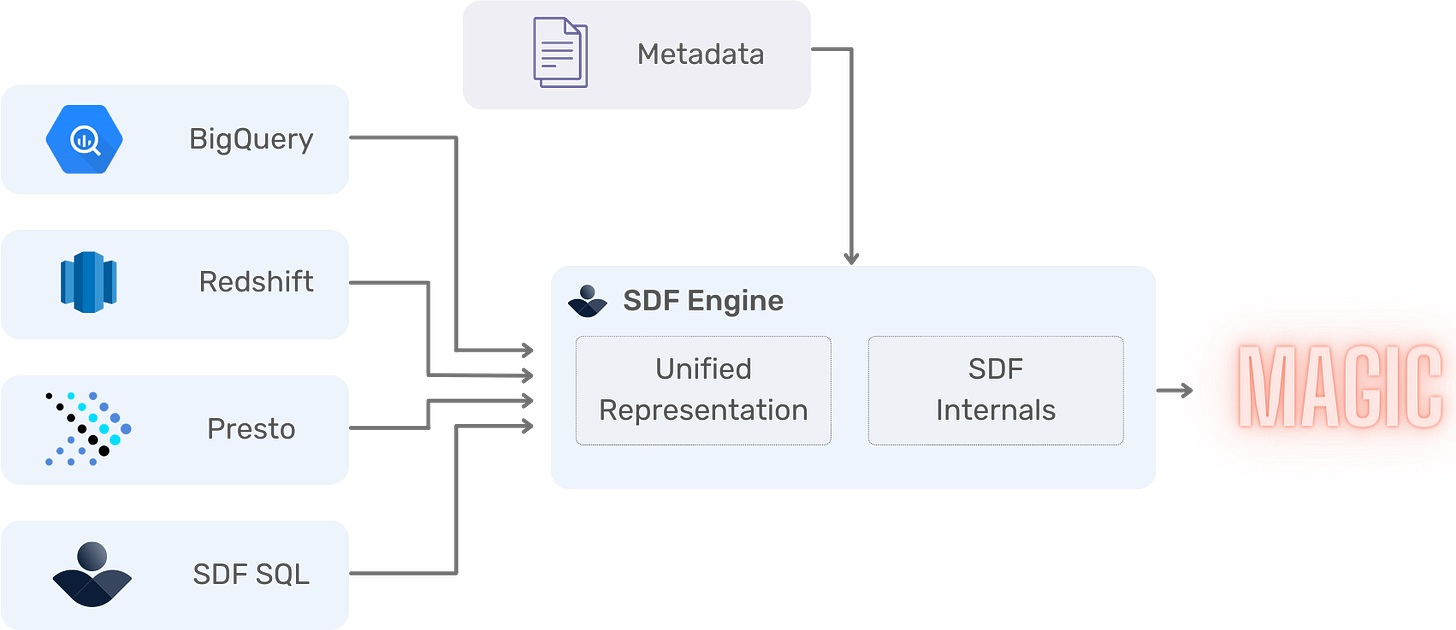

The SDF Compiler Design

SDF uses your existing, unmodified SQL sources and translates them into a series of relational algebra operations (like projection, scan, join, etc.) to create a logical plan. Note that these relational operations are defined completely independent of the SQL language.

SDF uses the logical plan implementation from Apache Datafusion. Here is the representation of q1 as a logical plan.

-- query q1 (again)

select “Abc” from some_schema.some_table;

-- logical plan of q1 from SDF's compilation with Trino dialect

Projection: some_schema.some_table.Abc AS Abc

TableScan: some_schema.some_table

-- logical plan of q1 from SDF's compilation with BigQuery dialect

Projection: Utf8("Abc") AS f0_

TableScan: some_schema.some_tableNote how SDF captures the semantic difference between SQL dialects! Trino’s logical plan for q1 references some_table.Abc. BigQuery’s logical plan, however, shows that “Abc” is just a Utf8 constant, but it still requires to scan the table to produce the constant for each row.

Compiling the different SQL dialects to Datafusion’s logical plan is not straight forward. Besides capturing the difference in syntax, in name resolution and type checking rules, we also tackle the following challenges:

DataFusion operators are more restrictive than their SQL counterparts. So SDF has to generate composite expressions to capture the proper SQL semantics.

Datafusion has a different type system than SQL, namely Apache Arrow. While most SQL types map nicely, others like higher order functions need novel extensions.

DataFusion is missing many SQL functions and operators. So SDF was built to be easily extensible with new functions from various engines.

Summary

Developing SDF required deconstructing the tower of Babel. The result is a transformative SQL engine which captures the precise semantics of each dialect and unifies them to a SQL agnostic logical plan. Since SDF works with existing SQL dialects, onboarding is simple. And SDF doesn’t need data - static analysis alone enables it to provide fast feedback during development about type mismatches or failing checks at warehouse scale.

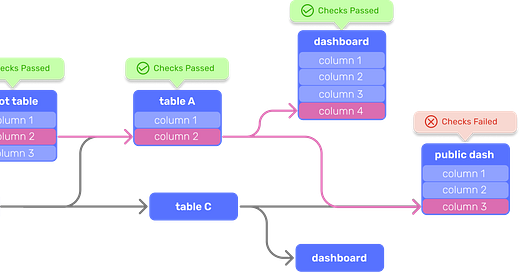

In further blog posts, we will explain how SDF uses the generated logical plan as the basis for a powerful static analysis toolbox with features like precise column level lineage, strong type checks, and protections against data privacy leaks.

Thanks for doing this incredible work! If more db’s supported iso/ansi sql it wouldn’t be such a challenge! I assume we write the sql in ansi/iso form to submit to the tool?