Introducing Impact Analysis: Streamlining Data Pipeline Management

Mitigating Risk, Cutting Costs, and Enhancing Collaboration with Impact Analysis

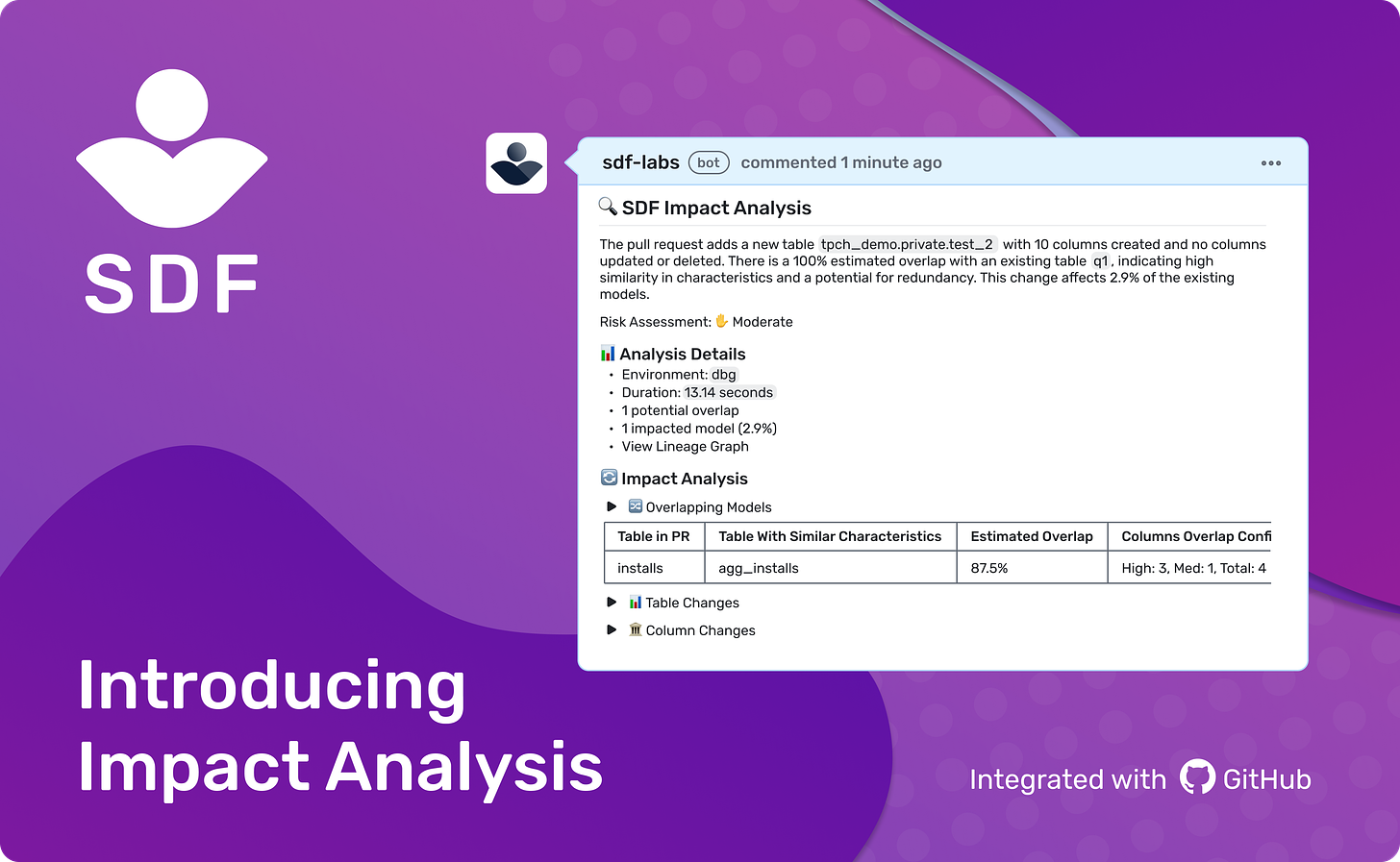

In fast-moving data environments, managing changes across complex pipelines can quickly become a tangled mess of models, especially without the right tools to cut through the chaos. Enter SDF’s column-level Impact Analysis, seamlessly integrated with GitHub, where we’ve transformed what was once a painstaking, labor-intensive process into a smooth, automated operation. No more guesswork or frantic downstream damage control—Impact Analysis empowers your team to understand the ripple effects of data model changes before they hit production.

Synchronization Between Your PRs and SDF Cloud

Impact Analysis is designed to integrate seamlessly into your existing GitHub workflow. No new tools, no steep learning curve. When you open a pull request, SDF Cloud automatically posts a comment detailing the impact of your changes, with direct links to relevant column-level lineage and metadata. You can view how models are connected, where changes have occurred, and make decisions about merging or revising—all within the GitHub interface.

Our GitHub integration ensures your SDF workspace stays in sync with your GitHub repository, allowing your SDF cloud catalog and lineage graphs to reflect the latest code changes automatically. Whether you’re managing branches or multiple workspaces, the integration gives you a direct view of how different versions of your models evolve, all within the SDF Cloud.

The analysis covers modifications to models, their downstream impacts, and key metrics such as table-level and column-level alterations. The aim here is simple: take the first step towards preventing business logic errors by ensuring that no change goes unnoticed before being merged. Bugs with business logic are traditionally hard to catch since they are issues in how the data is being calculated, not in the SQL semantics or syntax. By catching them early on, you are reducing the risk of implementing changes with unintended consequences, thus keeping your data pipelines robust.

Avoid Redundant Work with Model Overlap Analysis

Sometimes data engineers create new queries or tables without realizing that a very similar table already exists, leading to redundant work, unnecessary compute costs, and most importantly, a lack of one source of truth across your data teams. To prevent this, SDF is able to uniquely detect the model overlap, alerting users when a table in their PR closely resembles an existing table. It highlights the table in question, identifies the table with similar characteristics, and assesses the column-level similarity to estimate the model overlap.

This is incredibly valuable for optimizing your data environment. Instead of creating and managing multiple similar tables, which can bloat your warehouse and increase compute and storage costs, data engineers can either modify the existing table or remove the duplicate altogether. This not only keeps the data warehouse lean and efficient but also improves collaboration across teams, ensuring that everyone is using the same, consistent data sets.

Bring Transparency and Speed to Code Reviews

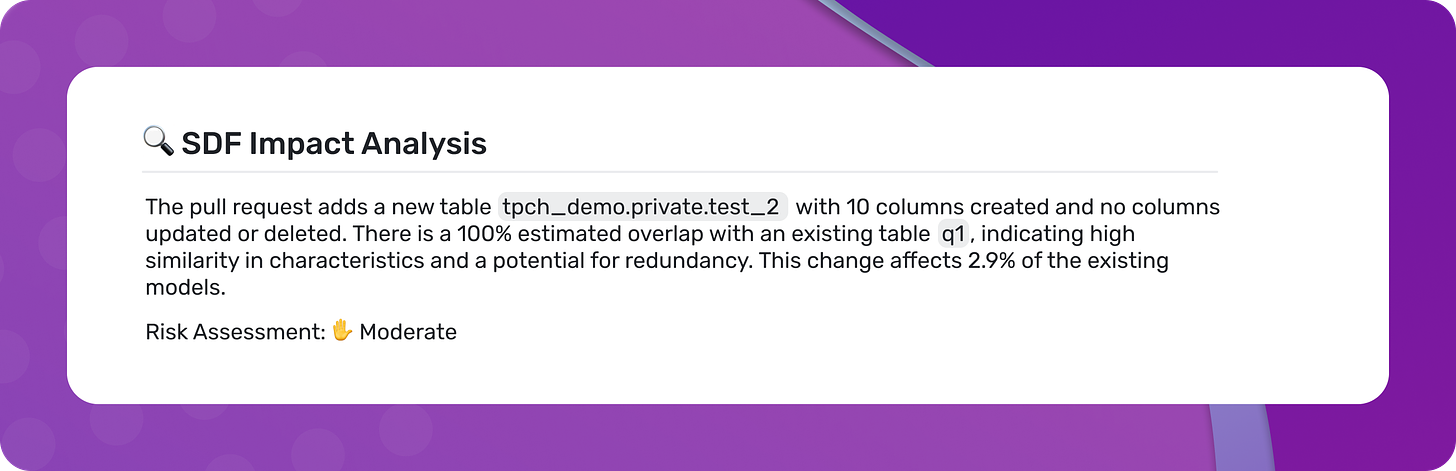

Understanding the effects of data changes is important for every stakeholder, but not everyone reviewing a pull request is focused on the technical intricacies of impact analysis reports. That’s why we’ve introduced AI to automatically summarize the key impacts of each PR in natural language at the top of every Impact Analysis comment. This AI-generated summary distills the intricate details into a few sentences, allowing product managers, business stakeholders, and other team members to easily understand how the changes affect the data warehouse.

This transparency is key to ensuring that everyone involved in the data pipeline is on the same page. Business users, data analysts, and engineers alike benefit from having the full context of changes and their effects on the broader data landscape.

By providing this high-level summary upfront, we ensure that everyone involved in the review process stays informed without needing to dig through technical specifics. Whether it’s assessing potential risks or understanding how downstream models might be impacted, the AI summary allows for faster, more efficient decision-making across the board, keeping reviews clear and on track.

Proactively Mitigate Risk in Data Pipelines

A single change in a data warehouse can create a domino effect, disrupting models and reports downstream. Understanding these potential risks before code is merged is crucial to maintaining data quality and ensuring business continuity. By catching issues earlier in the development process, Impact Analysis helps shift data quality left, allowing teams to address potential problems before they escalate into larger issues later in production.

Instead of waiting for issues to surface post-merge, our GitHub integration automatically triggers a compile on every pull request, ensuring that breaking changes are caught early. This automated compile prevents problematic code from being introduced, giving your team an immediate check on the health of the data models before anything is merged, helping teams avoid costly rollbacks and emergency fixes.

Combined with Impact Analysis, this process goes further by showing the full scope of potential downstream effects. It identifies how changes to your models will impact dependent tables, analytics, and business processes, offering the foresight needed to prevent disruptions before they occur. This proactive approach gives teams the confidence to approve changes, knowing they’ve seen—and mitigated—the risks upfront.

The result? More efficient use of engineering time and a boost in overall team productivity, all while maintaining high data quality and control.

Final Thoughts

In today’s data-driven world, where the complexity of data pipelines continues to grow, tools that automate and simplify the process of managing data models are invaluable. Impact Analysis delivers on three key fronts:

Reducing Risk: By combining impact analysis with automated compilation, you can catch and prevent breaking changes before they reach production, safeguarding your data operations.

Optimizing Costs: Impact Analysis and our compilation check for breaking changes without running anything against your Cloud Compute, reducing the need for staging runs and minimizing data testing costs significantly.

Improving Transparency: Through AI-generated summaries and clear visualizations, non-technical stakeholders can easily understand the impact of incoming changes, promoting alignment across the entire team.

With these benefits, Impact Analysis is designed to streamline data management, boost efficiency, and keep your operations running smoothly. If you’re interested in trying it out, schedule a demo of SDF Cloud today.